Getting access to Zendesk’s Google Cloud and Artifactory from GitHub dotfile repos

At Assetnote, we’re a small team with InfoSec backgrounds, and part of our product development involves actively monitoring trends in the causes of organizational exposures and breaches. One common pattern we’ve identified is employees and contractors accidentally leaking sensitive data such as credentials, source code and even raw customer PII on third-party platforms like GitHub, Pastebin and Trello.

In this article we’re going to talk specifically about exposures on GitHub. These are typically made by employees or contractors in technical roles, and are more likely to contain credentials with access to infrastructure. Git can be tricky to get right: while many of the cases we’ve disclosed via vulnerability disclosure programs involved credentials left in a historical commit (some even labelled “Remove credentials”), in some cases credentials were still sitting in the HEAD of the master branch.

Today we’re announcing our Third-Party Platform Exposure (TPPE) module, which will be available for the Assetnote continuous security (CS) platform later this year, and disclosing some of the most critical findings we made while benchmarking it against public bug bounty targets.

Zendesk’s Google Cloud and Artifactory

On February 15th, after we launched it for a beta test, our TPPE monitor detected two public repositories containing Zendesk employee dotfiles. A dotfiles repository is typically used by a developer to make their CLI and SDK settings easily portable between machines.

Looking at the first repository, our TPPE monitor reported that two common credential formats were present: an Artifactory token for a repository hosted with JFrog, and a GCloud “legacy-credentials” folder.
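To give a feel for what pattern detection over a dotfiles repository can look like, here is a minimal sketch. It is not Assetnote’s detection logic: the variable-name regex, the folder names and all function names are assumptions chosen for illustration, and a real monitor would need far broader rules.

```python
import os
import re

# Illustrative patterns only (assumptions, not Assetnote's actual rules).
ARTIFACTORY_VAR_RE = re.compile(
    r"^\s*(?:export\s+)?(ARTIFACTORY_(?:\w*_)?(?:KEY|TOKEN|PASSWORD))\s*=\s*\S+",
    re.IGNORECASE | re.MULTILINE,
)
# gcloud itself names the folder "legacy_credentials"; the hyphenated
# spelling is accepted too because the article writes it that way.
GCLOUD_LEGACY_DIRS = {"legacy_credentials", "legacy-credentials"}


def is_gcloud_legacy_path(relpath):
    """True if any path component is a gcloud legacy credentials folder."""
    parts = relpath.replace("\\", "/").split("/")
    return any(part in GCLOUD_LEGACY_DIRS for part in parts)


def find_artifactory_vars(text):
    """Return names of Artifactory-style secret variables assigned in text."""
    return [match.group(1) for match in ARTIFACTORY_VAR_RE.finditer(text)]


def scan_dotfiles(root):
    """Walk a checked-out repository and collect (kind, location) findings."""
    findings = []
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames[:] = [d for d in dirnames if d != ".git"]  # skip git internals
        for name in filenames:
            path = os.path.join(dirpath, name)
            relpath = os.path.relpath(path, root)
            if is_gcloud_legacy_path(relpath):
                findings.append(("gcloud-legacy-credentials", relpath))
                continue
            try:
                with open(path, encoding="utf-8", errors="ignore") as handle:
                    text = handle.read()
            except OSError:
                continue  # unreadable file, nothing to report
            for var in find_artifactory_vars(text):
                findings.append(("artifactory-variable", f"{relpath}:{var}"))
    return findings
```

Calling `scan_dotfiles("path/to/clone")` on a local clone returns a list of findings for a human to review. Matching on names alone produces false positives, which is one reason pattern detection is usually paired with manual validation.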

Upon inspection by our team, we determined that these credentials were valid. We were able to confirm the Artifactory token by making a request to the hostname in the environment variables with the username and token listed:

[Embedded snippet: zendesk_artifactory_1.sh]
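As a rough illustration of this kind of check (not a reproduction of the original snippet), the sketch below builds an HTTP Basic-auth request against an Artifactory instance. The base URL, username and token are placeholders, and the choice of the `/api/repositories` endpoint is our assumption; the function only constructs the request and sends nothing.

```python
import base64
import urllib.request


def build_artifactory_check(base_url, username, token):
    """Build (but do not send) a Basic-auth GET request that lists repositories.

    base_url is assumed to be the Artifactory root, for example
    "https://artifactory.example.com/artifactory" (a placeholder host).
    """
    url = base_url.rstrip("/") + "/api/repositories"
    credentials = f"{username}:{token}".encode("utf-8")
    request = urllib.request.Request(url, method="GET")
    request.add_header(
        "Authorization",
        "Basic " + base64.b64encode(credentials).decode("ascii"),
    )
    return request
```

Passing the returned object to `urllib.request.urlopen` would send it; a 200 response suggests the credentials are accepted, while rejected credentials surface as an `urllib.error.HTTPError`. This should only ever be done against systems you are authorised to test, such as within the scope of a bug bounty program.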

We also validated the Google Cloud credentials. It’s not particularly straightforward to make an authenticated request to the Google Cloud API with cURL using the legacy credentials format, but we found that by simply using the gcloud configuration folder from the repository in our local shell, we could use the gcloud CLI as if we were the user.

[Embedded snippet: zendesk_gcloud_1.sh]
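One way to point the gcloud CLI at a configuration folder other than the default is the `CLOUDSDK_CONFIG` environment variable. The sketch below is an assumption about how such a check could be scripted, not necessarily what we ran at the time; `gcloud_invocation` and the example path are hypothetical names.

```python
import os


def gcloud_invocation(config_dir, *args):
    """Return (command, env) for running gcloud against another config folder.

    CLOUDSDK_CONFIG overrides the directory gcloud reads its configuration
    and credentials from. Nothing is executed here.
    """
    env = dict(os.environ)
    env["CLOUDSDK_CONFIG"] = os.path.abspath(config_dir)
    return ["gcloud", *args], env
```

For example, `cmd, env = gcloud_invocation("dotfiles/gcloud", "projects", "list")` followed by `subprocess.run(cmd, env=env, check=True)` would list the projects visible to whichever account that folder is configured for. Keeping the override in a per-process environment avoids touching your own gcloud configuration.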

These are some of the roughly 150 projects that the user’s credentials had access to. The project names have been slightly altered, but in essence they reflect what the projects were.

For those unfamiliar with Google Cloud, it makes management of SSH access really easy by removing the need to add every authorised user’s SSH keys to each box, and instead allows you to SSH to instances using the gcloud CLI:

[Embedded snippet: zendesk_gcloud_2.sh]

Although we did not test this when we discovered the bug, later investigation showed that it would have been possible to SSH into externally reachable instances using this command.

In the second repository, our TPPE monitor highlighted similar-looking Artifactory credentials with a different type of token. Using an updated proof of concept, we were able to prove that these were also valid:

[Embedded snippet: zendesk_artifactory_2.sh]

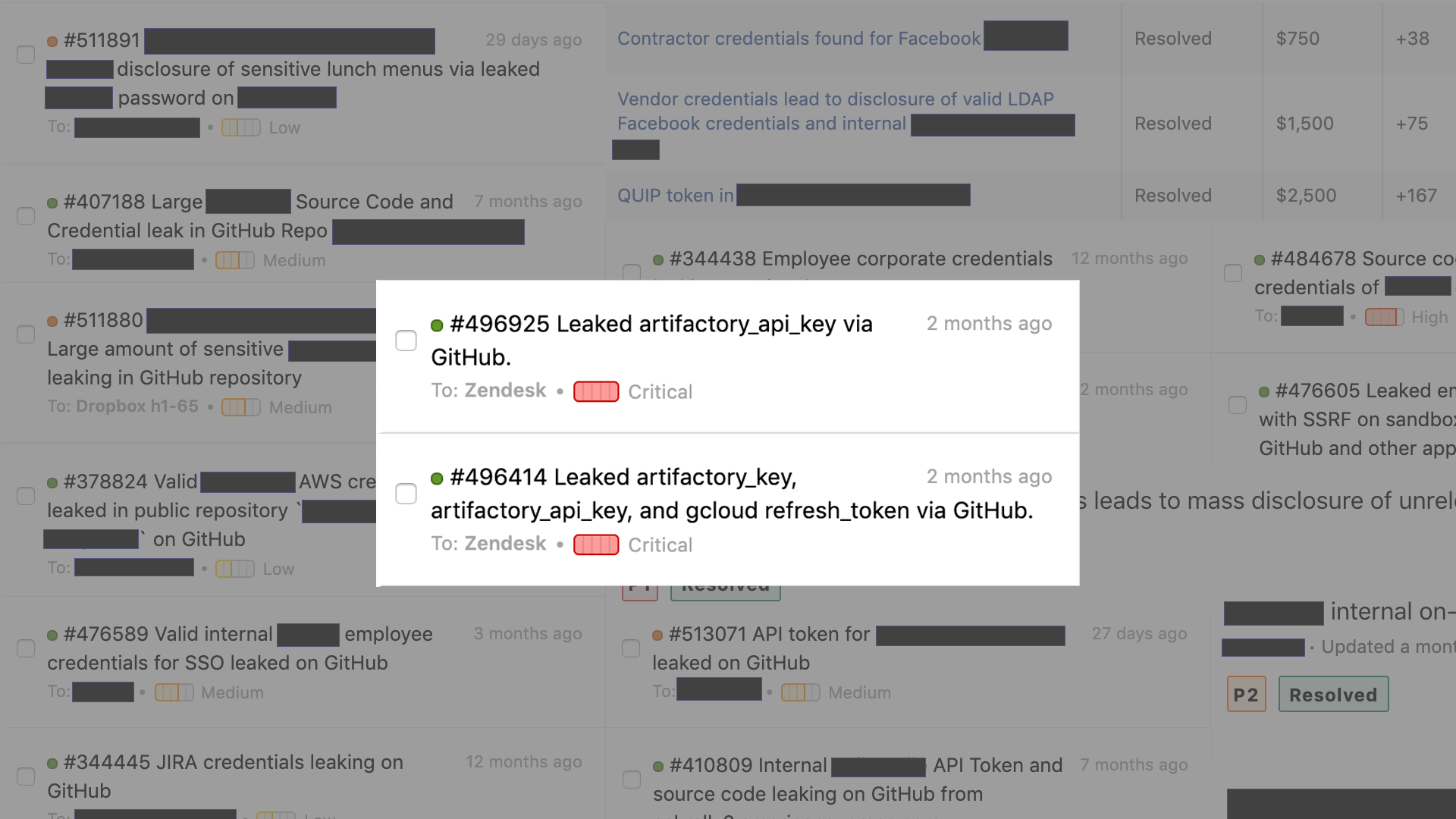

Zendesk immediately revoked all tokens, had both repositories removed within around 12 hours, and acknowledged our reports once that was done. They generously paid their maximum bounty ($3,000) per repository due to the critical nature of this vulnerability, and clarified that they’ve taken measures to prevent similar issues in the future. For a clearer timeline, the HackerOne reports are disclosed and can be read here:

- #496414 - Leaked artifactory_key, artifactory_api_key, and gcloud refresh_token via GitHub

- #496925 - Leaked artifactory_api_key via GitHub

Takeaways

Both of these GitHub repositories were leaked accidentally, and it’s not the first time something like this has happened. As you saw in the header, we’ve reported similar bugs before, with the impact varying from organization to organization, and bug bounty hunters are on the hunt too (see this report where $15,000 was paid for a GitHub Enterprise API token).

We recognise that it’s impossible to ensure issues like these never occur, and believe the best approach is continuous monitoring to identify such potential leaks as they occur. Our solution, Third-Party Platform Monitoring, will launch later this year and tackle this issue directly by using our asset discovery techniques to identify what to monitor and show exposures with a high degree of confidence using both pattern detection and human augmentation.

For bug bounty hunters, public tools like michenriksen/gitrob are really cool and a great addition to your toolchain if you want to start looking for issues like these. Credentials aside, looking at what a target makes public on GitHub intentionally or not can be very valuable to your research.

For organizations, we recommend asking your employees to be careful about what they make public. In our experience, the majority of leaks have been in repositories that were intended to be public but contained credentials due to a slip-up, a bad .gitignore, or even a plain-sight “Removing secrets” commit made through a misunderstanding of how git and GitHub work.
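The “Removing secrets” case is worth spelling out: deleting a credential in a new commit does not delete it from history, because the removed line is still visible in the diff of the commit that removed it. The sketch below, with an assumed and deliberately crude `SECRET_RE` pattern and hypothetical function names, flags deleted lines in patch output that look like secrets.

```python
import re
import subprocess

# Crude illustrative pattern (an assumption); real scanners use many rules.
SECRET_RE = re.compile(
    r"(?i)\b\w*(?:api_key|token|secret|password)\w*\s*[=:]\s*\S+"
)


def removed_secret_lines(patch_text):
    """Return lines deleted in a patch whose content looks like a secret."""
    hits = []
    for line in patch_text.splitlines():
        # Deleted lines start with '-'; '---' marks a file header instead.
        if line.startswith("-") and not line.startswith("---"):
            content = line[1:]
            if SECRET_RE.search(content):
                hits.append(content.strip())
    return hits


def scan_repo_history(repo_path):
    """Run `git log -p --all` in repo_path and scan every patch in history."""
    result = subprocess.run(
        ["git", "-C", repo_path, "log", "-p", "--all"],
        capture_output=True, text=True, check=True,
    )
    return removed_secret_lines(result.stdout)
```

If `scan_repo_history` returns anything for a repository that has ever been public, the safe assumption is that the credential is compromised and should be revoked, not just scrubbed from the repository.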
